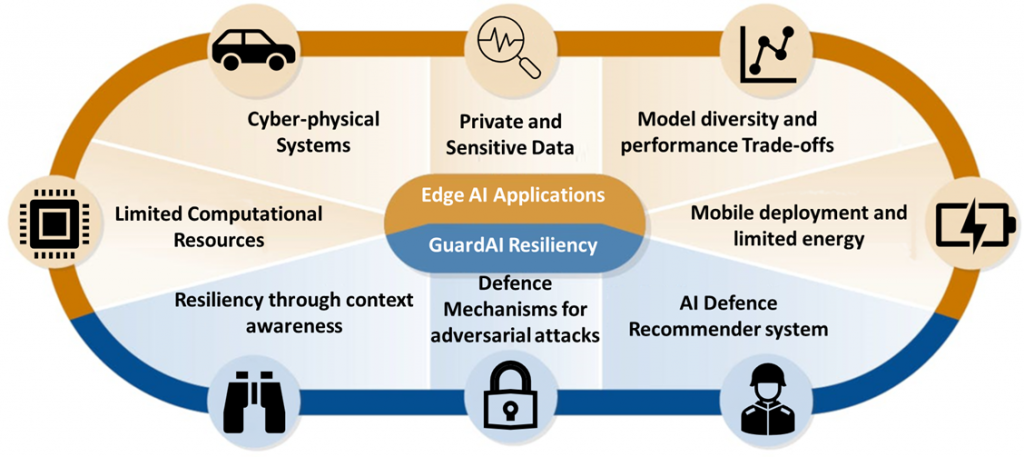

GuardAI is dedicated to tackling the key challenges in strengthening the resilience and security of Artificial Intelligence (AI) systems at the edge. Emphasis is placed on high-stakes domains where these systems are becoming widespread and will play a pivotal role in making crucial decisions, including drones or Unmanned Aerial Vehicles (UAVs), connected and autonomous vehicles (CAVs), and network edge infrastructure. These applications rely heavily on real-time decision-making and the processing of sensitive data, which makes them susceptible to a range of security threats and adversarial attacks.

Goal

GuardAI’s goal is to develop the next generation of resilient AI algorithms tailored for edge applications. In pursuit of this goal, GuardAI will engage in extensive research activities with the following aims:

- Develop innovative solutions to ensure the integrity, security, and resilience of these systems, ultimately fostering trust and accelerating the safe adoption of AI-driven technologies.

- Integrate context indicators and holistic situational understanding into AI algorithms, enabling systems to adapt and make informed decisions in dynamic environments.

- Collaborate with researchers, industry experts, government agencies, and AI practitioners to lay the groundwork for future certification schemes that promote the adoption of secure AI technology across various domains.

Ultimately, GuardAI is committed to developing cutting-edge, secure, and robust solutions tailored to the specific needs of edge AI, safeguarding critical infrastructure and systems.

Objectives

- Identify characteristics for AI at the edge to inform the security-by-design concept

- Improve robustness of AI algorithms running on edge/embedded systems against adversarial attacks

- Enhance resiliency of AI at the edge by leveraging auxiliary contextual information

- Establish a path towards certification of security-compliant edge AI algorithms

- Raise awareness and share knowledge with end users and decision makers on resilient AI

Approach

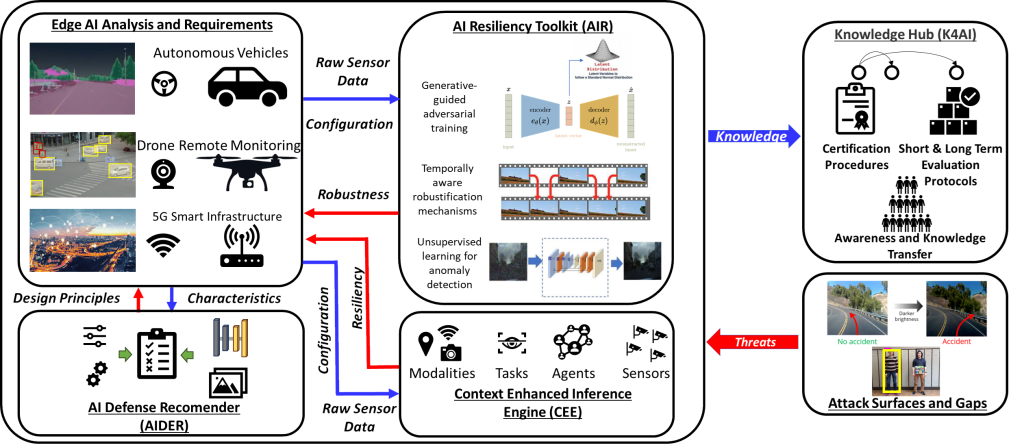

GuardAI comprises multiple activities aimed at determining attack surfaces and identifying potential vulnerabilities and gaps in AI systems. In this context, existing attack vectors and research gaps will be identified, with the existing limitations informing the development of approaches, algorithms, and system optimizations. At the application level, purpose-built context embedding mechanisms will be developed that allow an edge AI system to exploit various context indicators, such as location, mapping, multi-sensory orchestrations, and multi-agent environments, to enrich its reasoning capabilities. The context capabilities will change depending on the edge AI application configuration and requirements. Using the raw sensory data available in each application, domain-tailored solutions will be developed so that machine and deep learning for perception and analytics can operate in the presence of adversarial attacks, allowing the overall operation to be performed in a trusted and resilient manner.

These approaches will employ unsupervised learning for anomaly detection, temporally aware robustification mechanisms, and generative-guided adversarial training. The main research work, encompassing context information, situational awareness, and novel algorithms and tools for adversarial robustness, will be tailored for use in edge AI applications for drones, CAVs, and edge network infrastructure. These application domains encapsulate many of the challenges of edge AI applications, such as real-time processing, sensitive data, limited resources, and mobile deployment with limited energy. Consequently, due to the complexity of designing tailored defence mechanisms for edge AI systems, an automated recommender approach will be designed to encapsulate the security-by-design concept.
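To make the idea of unsupervised anomaly detection concrete, the following is a minimal sketch and not GuardAI's method. It assumes a single stream of scalar sensor readings and uses a robust z-score based on the median absolute deviation (MAD), a common label-free baseline. The function name `mad_anomaly_flags`, the threshold of 3.5, and the sample readings are illustrative assumptions.

```python
from statistics import median


def mad_anomaly_flags(readings, threshold=3.5):
    """Flag readings whose robust z-score exceeds `threshold`.

    Hypothetical helper for illustration: no labels are needed, only the
    readings themselves, which is what makes the approach unsupervised.
    """
    med = median(readings)
    mad = median(abs(x - med) for x in readings)
    if mad == 0:
        # At least half of the readings equal the median: flag any that differ.
        return [x != med for x in readings]
    # 0.6745 rescales the MAD so the score is comparable to a standard
    # z-score when the data is roughly normally distributed.
    return [abs(0.6745 * (x - med) / mad) > threshold for x in readings]


# Made-up example: one reading is far outside the usual range.
readings = [10.1, 9.9, 10.0, 10.2, 9.8, 10.1, 25.0, 10.0]
flags = mad_anomaly_flags(readings)
suspect = [x for x, is_anomaly in zip(readings, flags) if is_anomaly]
```

A median-based score is used here instead of the mean and standard deviation because a single extreme reading would otherwise inflate the statistics used to detect it. A deployed edge system would likely need a windowed or streaming variant.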

The consortium's accumulated expertise and the new knowledge that will be created will be digitized in a knowledge hub specialized for resiliency and robustification. Through this knowledge hub, GuardAI will derive evaluation protocols and standardized metrics that offer robustness guarantees for AI systems. These metrics will serve as a means to assess the effectiveness of the developed security mechanisms and provide confidence in the reliability and safety of edge AI applications. Furthermore, the knowledge hub will act as the main medium for active engagement with the relevant actors, enabling the creation of a wide network of experts, dialogue among stakeholders with aligned interests, and the capture and diffusion of existing and new knowledge.
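As an illustration of what a metric with a robustness guarantee can look like, the sketch below computes certified robust accuracy for a linear binary classifier. It is an assumed example, not one of the metrics GuardAI will define. For a linear model, the worst-case perturbation with L-infinity norm at most `eps` lowers the classification margin by exactly `eps` times the L1 norm of the weights, so the guarantee can be checked in closed form. All names and data are hypothetical.

```python
def certified_robust_accuracy(weights, bias, samples, labels, eps):
    """Fraction of samples that stay correctly classified under every
    L-infinity perturbation of size at most `eps`.

    Assumes a linear classifier sign(w . x + b) and labels in {-1, +1}.
    """
    l1_norm = sum(abs(w) for w in weights)
    robust = 0
    for x, y in zip(samples, labels):
        score = sum(w * xi for w, xi in zip(weights, x)) + bias
        margin = y * score
        # The strongest attack within the budget reduces the margin by
        # eps * ||w||_1; the sample is certified if the margin stays positive.
        if margin - eps * l1_norm > 0:
            robust += 1
    return robust / len(samples)


# Made-up data: three correctly classified points and one misclassified point.
weights, bias = [1.0, -1.0], 0.0
samples = [[2.0, 0.0], [0.5, 0.0], [0.0, 3.0], [1.0, 0.0]]
labels = [1, 1, -1, -1]

clean_accuracy = certified_robust_accuracy(weights, bias, samples, labels, eps=0.0)
robust_accuracy = certified_robust_accuracy(weights, bias, samples, labels, eps=0.5)
```

With `eps=0.0` the function reduces to ordinary accuracy, so the gap between the two values shows how much performance is lost once an attacker is allowed a perturbation budget. Deep networks do not admit such a simple closed form and typically require bound-propagation or empirical attack-based evaluation instead.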